sunfluidh:domain_features_namelist


Domain_Features

This data set defines the domain size, the grid data, the domain decomposition features (MPI parallelization: the number of MPI processes bound to subdomains and how they are distributed over the domain), and the number of threads also used to split the domain (OpenMP parallelization).

Full data set of the namelist

&Domain_Features Geometric_Layout = 0,

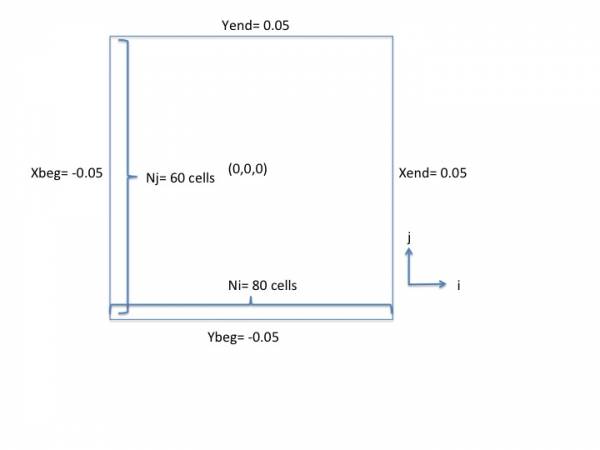

Start_Coordinate_I_Direction =-0.05 ,

End_Coordinate_I_Direction = 0.05,

Start_Coordinate_J_Direction =-0.05 ,

End_Coordinate_J_Direction = 0.05,

Start_Coordinate_K_Direction = 0.00 ,

End_Coordinate_K_Direction = 0.00,

Cells_Number_I_Direction = 80 ,

Cells_Number_J_Direction = 60 ,

Cells_Number_K_Direction = 1,

Number_OMP_Threads = 1,

MPI_Cartesian_Topology = .false. ,

MPI_Graphic_Topology = .false. ,

Total_Number_MPI_Processes = 1,

Max_Number_MPI_Proc_I_Direction= 1 ,

Max_Number_MPI_Proc_J_Direction= 1,

Max_Number_MPI_Proc_K_Direction= 1,

Regular_Mesh = .true. /

With the domain decomposition approach (MPI parallelization), the numbers of cells (Cells_Number_I_Direction, Cells_Number_J_Direction, Cells_Number_K_Direction) refer to each subdomain, not to the whole domain.
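To illustrate the per-subdomain cell counts, here is a minimal sketch of a data set for a hypothetical 2 x 2 Cartesian decomposition of an 80 x 60 cell domain. It rests on assumptions this page does not confirm: that the start and end coordinates describe the whole domain, that every subdomain holds the same number of cells, and that Total_Number_MPI_Processes equals the product of the process counts per direction.

```fortran
&Domain_Features
  ! Hypothetical example: 80 x 60 cells overall, split over 2 x 2 MPI processes.
  ! Assumption: the coordinates below describe the whole domain.
  Geometric_Layout = 0,
  Start_Coordinate_I_Direction = -0.05,
  End_Coordinate_I_Direction = 0.05,
  Start_Coordinate_J_Direction = -0.05,
  End_Coordinate_J_Direction = 0.05,
  Start_Coordinate_K_Direction = 0.00,
  End_Coordinate_K_Direction = 0.00,
  Cells_Number_I_Direction = 40,   ! cells per subdomain: 80 / 2
  Cells_Number_J_Direction = 30,   ! cells per subdomain: 60 / 2
  Cells_Number_K_Direction = 1,
  Number_OMP_Threads = 1,
  MPI_Cartesian_Topology = .true.,
  MPI_Graphic_Topology = .false.,
  Total_Number_MPI_Processes = 4,  ! assumed to be 2 x 2 x 1
  Max_Number_MPI_Proc_I_Direction = 2,
  Max_Number_MPI_Proc_J_Direction = 2,
  Max_Number_MPI_Proc_K_Direction = 1,
  Regular_Mesh = .true. /
```

Compared with the single-process data set, only the cell counts and the MPI entries change. The cell counts are halved because they now apply to each of the two subdomains along I and along J.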

Find some examples here.

Definition of the data set

Geometric_Layout

- Type : integer value

- This option selects the type of geometry configuration used:

  - 0: Cartesian geometry.

  - 1: Cylindrical geometry. The axis is oriented along the K-direction. The coordinate system is $r(i), \theta(j), z(k)$.

  - 2: Cylindrical geometry. The axis is oriented along the I-direction. The coordinate system is $r(j), \theta(k), z(i)$.

  - 3: Cylindrical geometry. The axis is oriented along the J-direction. The coordinate system is $r(k), \theta(i), z(j)$.

- Default value = 0

Start_Coordinate_I_Direction

- Type : real value

- Origin coordinate along the I-direction.

- No default value: it must be set by the user

Start_Coordinate_J_Direction

- Type : real value

- Origin coordinate along the J-direction.

- No default value: it must be set by the user

Start_Coordinate_K_Direction

- Type : real value

- Origin coordinate along the K-direction.

- No default value: it must be set by the user

End_Coordinate_I_Direction

- Type : real value

- End coordinate along the I-direction.

- No default value: it must be set by the user

End_Coordinate_J_Direction

- Type : real value

- End coordinate along the J-direction.

- No default value: it must be set by the user

End_Coordinate_K_Direction

- Type : real value

- End coordinate along the K-direction.

- No default value: it must be set by the user

Cells_Number_I_Direction

- Type : integer value

- Number of cells along the I-direction (excluding the ghost-cells).

With the domain decomposition approach (MPI parallelization), this number refers to each subdomain, not to the whole domain.

- Default value= 0

Cells_Number_J_Direction

- Type : integer value

- Number of cells along the J-direction (excluding the ghost-cells).

With the domain decomposition approach (MPI parallelization), this number refers to each subdomain, not to the whole domain.

- Default value= 0

Cells_Number_K_Direction

- Type : integer value

- Number of cells along the K-direction (excluding the ghost-cells).

With the domain decomposition approach (MPI parallelization), this number refers to each subdomain, not to the whole domain.

- Default value= 0

Number_OMP_Threads

- Type : integer value

- Number of threads for OpenMP parallelization

- Default value= 1

MPI_Cartesian_Topology

- Type : Boolean value

- Selects the MPI Cartesian topology for the domain decomposition method (same number of subdomains along a given direction)

- Default value= .false.

MPI_Graphic_Topology

- Type : Boolean value

- Selects the MPI graphic topology for the domain decomposition method (the number of subdomains along a given direction may vary)

- Default value= .false.

Total_Number_MPI_Processes

- Type : integer value

- Total number of MPI processes used in the domain decomposition method

- Default value= 1

Max_Number_MPI_Proc_I_Direction

- Type : integer value

- Number of MPI processes along the I-direction (maximum number for the graphic topology)

- Default value= 1

Max_Number_MPI_Proc_J_Direction

- Type : integer value

- Number of MPI processes along the J-direction (maximum number for the graphic topology)

- Default value= 1

Max_Number_MPI_Proc_K_Direction

- Type : integer value

- Number of MPI processes along the K-direction (maximum number for the graphic topology)

- Default value= 1

Regular_Mesh

- Type : Boolean value

- If .true., the mesh size is regular along each direction and the grid is built directly by the code.

- If .false., the grid is irregular and the cell distribution is read from the files maillx_xxxxx.d, mailly_xxxxx.d and maillz_xxxxx.d (xxxxx is the subdomain/MPI-process number if the MPI domain decomposition is used). These files are created by the mesh builder named meshgen.x.

- Default value= .true.
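As a worked example, suppose that a regular mesh means a uniform cell size in each direction; this is an interpretation of the description above, not something this page states explicitly. The cell size then follows from the start and end coordinates and the number of cells. The symbols $\Delta_I$ and $\Delta_J$ are introduced here for illustration only. With the values of the full data set given at the top of this page and a single MPI process:

$$\Delta_I = \frac{\text{End\_Coordinate\_I\_Direction} - \text{Start\_Coordinate\_I\_Direction}}{\text{Cells\_Number\_I\_Direction}} = \frac{0.05 - (-0.05)}{80} = 1.25 \times 10^{-3}$$

$$\Delta_J = \frac{\text{End\_Coordinate\_J\_Direction} - \text{Start\_Coordinate\_J\_Direction}}{\text{Cells\_Number\_J\_Direction}} = \frac{0.05 - (-0.05)}{60} \approx 1.667 \times 10^{-3}$$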


Last modified: 2016/11/23 11:08 by yann